Introduction

Before we dive in, it’s important to note that this piece was published on January 9th 2023 exclusively for GMI clients. I am publishing several chapters today for readers of Short Excerpts from Global Macro Investor (All of you!) as we continue through a precarious, volatile year. Any trade recommendations have been removed.

Like I noted in the video, here are the links for you to learn more and receive your special offers to both GMI and Real Vision's Pro Macro.

GMI: https://rvtv.io/GMIOffer

Pro Macro: https://rvtv.io/ProMacro

You can also visit this link if you’d rather read the report in PDF format.

Ok, let's get into this...

Well, this year marks eighteen years of writing Global Macro Investor; I can't quite believe where all the time has gone! What a hell of a journey it's been. In this Think Piece I cover the full story of GMI.

If you are new to Global Macro Investor, the January Monthly is traditionally presented in a completely different format to the rest of the year: the comprehensive annual January Think Piece. The idea is to encourage us all to step back, think about things a little differently, learn something new and enjoy reading an eclectic selection of articles on a wide range of topics that will hopefully inspire you.

I also like to review the previous year and set some expectations for the year ahead. I hope you find it useful and interesting.

A Review of 2022

It sucked. End.

To be fair, we called the business cycle spot on, all the way back in early 2022. We have been calling for a recession in early 2023 since then.

Overall, we mainly sat on our hands during the year and traded very little. The overriding idea was to use the coming recession to get into our long-term themes, or to add to them.

We started adding Exponential Age stocks in the summer, which was too early as ever! But this is a long-term theme, and we are looking to add further in the coming weeks. More on this in a bit.

We also added to our core long-term crypto holdings into the big sell-off in June. We got the timing about right in ETH, but SOL got caught in the FTX fallout and fell sharply. More on SOL later too...

I have made it very clear over the course of the year why I chose not to trade around our core crypto bets and prefer the buy, hold and add strategy and I will continue to do just that.

We were too early in bonds (again! I am always too early!) but we added into recent weakness and will add more as things unfold. The Fed want to be stickier on rates than in previous cycles so we will have to be patient.

On the trading side, we did very nicely in FX, nailing the strong dollar and getting out at the right time. At least one trade went right...

The core trades overall were pretty much a wash – bonds lost money while FX and carbon made money. Thank goodness for $/JPY which helped offset the bonds!

The long-term portfolio was a horror story, but one which was broadly expected and acceptable (although still painful). The biggest trade by far – ETH – is still up 400% from our entry. As a guideline, my weightings have been 80% ETH, 20% other stuff. My views are still as strong as they have ever been, and I have been adding into weakness. I have an outstanding buy order in ETH (not yet filled) and will add to SOL later in this publication. Keep going...

If you strip out the long-term portfolio it was a “meh” year – neither here nor there. I tend not to look at the long-term portfolio on a yearly basis as it fucks with your mind. It is a five year plus view, so I have to look at it in those terms.

The Markets Theme for 2023

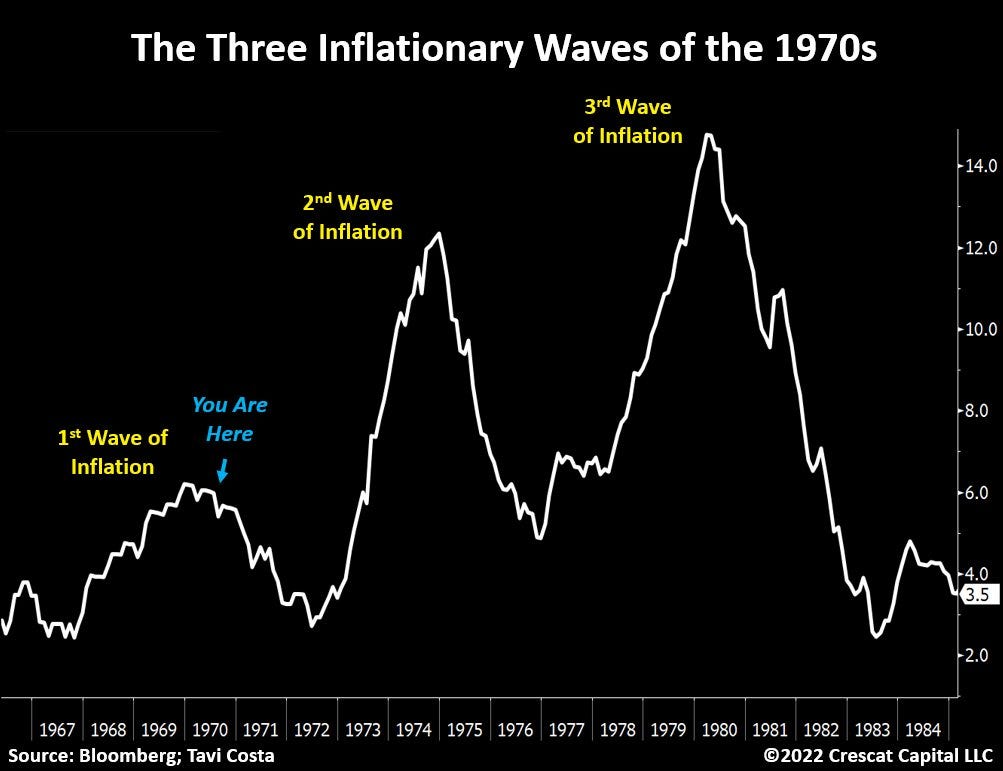

Market participants are utterly convinced that we are going to see a re-run of the 1970s. The Fed are also fearful of the 1970s. If you remember, the ‘70s were one of the very few times in history where inflation fell during a recession and then picked up again afterwards, going to new highs.

Everyone is utterly convinced that deglobalisation will create higher goods prices; sticky prices and rising commodities will add to this due to underinvestment.

This chart hits my Twitter feed. Every. Single. Day.

The other 100% consensus idea is that equities must fall much further due to earnings falling. Again, this is posted on my Twitter feed probably ten times a day.

Add to this the fact that tech is apparently dead and is going to be in a bear market, or a non-performer, for the next five years AND crypto is deceased.

That is the core view of the market.

But what doesn’t square with me is that the Fed hold the same view and fear of the 1970s, and therefore say that they will not cut rates fast but will push them higher. So, if the Fed are fighting the rising inflation view, then how can it happen?

Hmmm...

Meanwhile, our GMI views are probably the most divergent they have ever been versus broad consensus:

GMI Themes for 2023

We think that the US economy is just entering recession...

... but we think it will be a short recession. Our Financial Conditions Index is rising fast...

We think that China is leading the cycle and liquidity is coming...

... and that is driving up the OECD Lead Indicator...

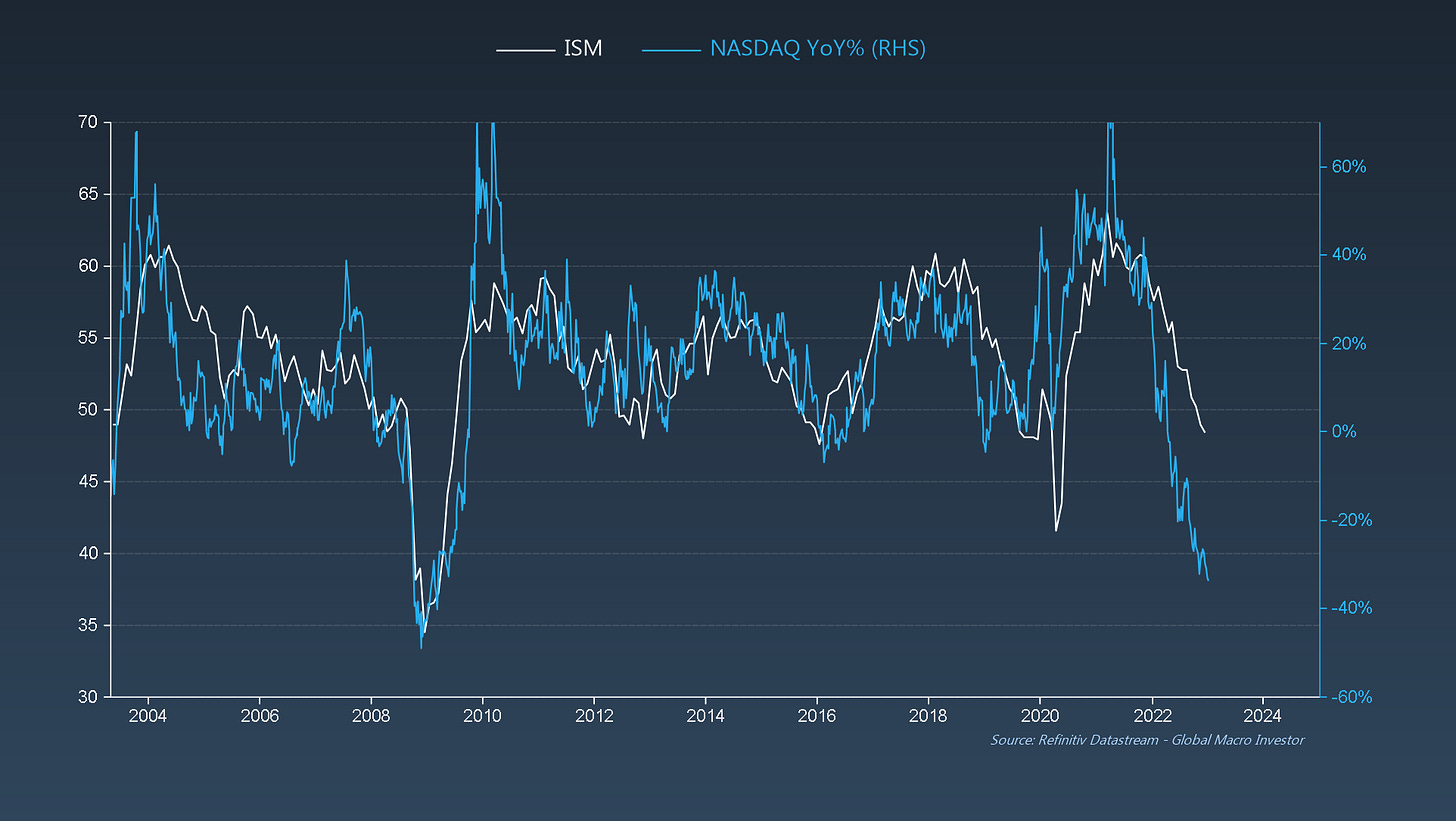

The economy is going to feel really ugly from here on out to the summer. However, we think that most, if not all, of the worst is priced in. The SPX is pricing ISM at 43...

... the NDX is pricing ISM at 38...

... and Exponential Age stocks are pricing in ISM at 36...

However, bonds are following the Fed’s, “We are never, ever, ever going to cut” narrative...

I think bonds will be the traditional macro trade of the year in 2023 as they converge with inflation expectations. 5-year inflation expectations in the next couple of months will trade well below 2%...

We think inflation evaporates... to zero and below over 2023...

... and we think the Central Banks have misread the inflation pressures...

This is how we see inflation play out, worst case: a return to 2%...

But our base case remains for inflation to go negative and for a lower inflation peak in the next business cycle...

... and we assume that equities will likely follow the path of all post-inflation episodes...

The consensus narrative is that the markets haven’t yet taken enough pain. This is because people just look at equities but, when you take bonds into account, the loss was twice as bad as in 2008 in dollar terms, and marginally worse as a percentage of global GDP.

By every measure, this was one of the worst years for assets in all history. I’d hate to be a pensioner...

... and sentiment is HORRIFIC...

... truly awful...

... beyond terrible...

... miserable...

... catastrophic...

... and everyone is as short as fuck...

However, I do concede that it’s tough to call whether the equity market makes a significant new low or not. I’m not ready to pull the trigger on equities outside my secular Exponential Age theme.

But that being said, if we do see a move lower over the next four weeks, we will have an incredible cluster of weekly 9 and 13 counts. I count two 9s and six 13s, and another 13 will come at the end of Jan/beginning of Feb...

And finally, crypto is being held down by Global M2 YoY... and that is turning up due to China...

... and M2 growth is about to accelerate...

A turn in global liquidity will bring a turn in risk assets from technology to crypto. It is coming...

A Simple Plan for 2023

Our plan for Q1 2023 is pretty simple.

Using our clear framework to dovetail secular themes and tie them to business cycle entry points, we want to use any further weakness to buy crypto and buy Exponential Age equities.

I am strongly of the belief that in six months’ time the world will be a very different place – zero inflation and economic recovery.

We will also likely add some core bets in carbon, bonds, maybe copper and anything else that takes our shorter-term interest during the year.

The Crypto Plan for 2023

The plan here is pretty simple too. If ETH breaks the top of this channel we buy. If ETH hits the bottom of this channel, we buy.

We will use buy levels of 1500 or 1000...

The rise in M2 is coming in exactly at the long-term log trend... perfect (chef’s kiss, perfect) ...

With SOL, I think we are seeing a repeat of ETH in 2018: a 5-wave fall of 95%. Here is ETH (it rose 46x from the low in 2018 to the high in 2022!) ...

Here is SOL...

This might help you too...

The Exponential Age Plan for 2023

Throughout the year I took shit from all quarters for being incredibly bullish on Exponential Age technology over the coming decade. I’ve been called every name under the sun, including “irresponsible” and a “clueless moron”, amongst many others. We started buying as prices were finally hit hard and came into my buy zone, and we haven’t finished yet.

People look at tech investing and think that the chart of ARK means it is dead...

But we have been here before... it’s staggeringly similar to the NDX tech crash in 2001...

What those throwing popcorn from the cheap seats don’t tell you is that this is what happened next...

... or that the five years from 2002 to 2007 saw a 160% rise in the NDX vs the SPX rise of 93%.

Nor do they remember that in 2018 the Fed stopped hiking. They didn’t cut until July 2019 and didn’t stop QT until August 2019. Everyone remembers an instant pivot. It wasn’t. It was a pause, wait and see, and then reverse.

From the moment that the Fed stopped hiking rates, the NDX rose 60% in twelve months. The SPX rose 40%.

When everyone bleats on at me about value stocks, I just look at this chart: value stocks are a fallacy and growth stocks... well, they just grow...

While everyone hates tech, I sit and quietly observe, biding my time, waiting to add.

You see, in my mind there is absolutely zero chance this trade won’t work. I might not yet have the right basket but that will come over time as we learn.

How can I say that I think there is zero chance?

Because we are in the early stages of the acceleration/exponential curve of so many technologies – at the same time – that humanity has never lived through anything like this before. This is like the Greek, Roman and Arabic eras and the Renaissance, all rolled into one ten- to thirty-year period.

The level of technology innovation squeezed into this period right now is going to generate not only Metcalfe’s Law effects but also Reed’s Law (the sum of exponential curves) effects.
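For a sense of scale, here is a minimal sketch of the two laws as they are usually stated: Metcalfe's Law ties a network's value to the number of possible pairwise connections, n(n-1)/2, while Reed's Law counts the possible sub-groups of two or more members, 2^n - n - 1. The function names are made up for illustration, and the counts are not a valuation of anything.

```python
def metcalfe_pairs(n: int) -> int:
    """Possible pairwise connections among n members (Metcalfe-style count)."""
    return n * (n - 1) // 2


def reed_groups(n: int) -> int:
    """Possible sub-groups of two or more members among n members (Reed-style count)."""
    return 2 ** n - n - 1


if __name__ == "__main__":
    # Compare how quickly each count grows as the network gets bigger.
    for n in (5, 10, 20, 30):
        print(f"{n:>3} members: {metcalfe_pairs(n):>5,} pairs, {reed_groups(n):>13,} groups")
```

The pair count grows with the square of the membership; the group count roughly doubles with every member added, which is why the second effect dominates so quickly.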

In this bad year for tech, we have already seen a literal quantum jump in AI from OpenAI and Stability AI. Later in the Think Piece there are a couple of articles on this topic, so I won’t say much more now except that the number of users of OpenAI’s ChatGPT went from zero to one million in five days. Nothing like this has ever happened before. Literally, the entire internet and information age changed overnight. Stability AI will accelerate this because the technology is open source.

OpenAI will soon release GPT4. This will have five hundred times more parameters than GPT3, which is in itself mind-blowing.

Every single online business on earth will now build with AI.

Also, in a quiet year, Tesla released their pre-beta designs of their humanoid robot, Optimus. Tesla think that they can roll this out for production in five years. WTF!

SpaceX managed to mobilise its Starlink technology to be used in the war in Ukraine and the unrest in Iran. Starlink is essentially rebuilding the internet, eventually potentially free of sovereign interference. Starlink has 3,300 satellites in space, which is 50% of ALL the satellites that exist in space. This has happened in three years. The next Gen2 satellites are now ready for release and SpaceX aims to get 7,000 into space in the next couple of years.

In metaverse world, Oncyber launched a new browser-rendered Web3 metaverse. It only took two years to develop and it has already taken the Web3 world by storm...

Amazon not only released 500,000 robots into their warehouses but launched Prime Air, the beta test of their drone delivery service...

https://www.theverge.com/2022/12/28/23529705/amazon-drone-delivery-prime-air-california-texas

Oh, and they released their latest robots too...

But to be honest, the tech launches are coming out so rapidly now that it’s impossible to keep up!

This Exponential Age is the single most important thing to have happened for all of us in our lifetimes. We simply cannot ignore it. I will increasingly focus on this to help get everyone across the line. Yes, this might prove to be the eventual demise of humans but we will see fifty years+ of benefits before then and we will live longer and healthier.

Let’s revisit what the key themes are:

The Base Infrastructure Layer:

· Data networks (Starlink, 5G, 6G, etc).

· Computing power (distributed compute, quantum compute, edge compute, etc).

· Processing power (semiconductors).

· Green, cheap electricity.

The Productivity Layer:

· Artificial Intelligence.

· Robotics.

· 3D printing.

· Autonomous vehicles.

The Digital Value Layer:

· Digital assets.

· Digital asset networks.

· Digital ID.

The Human Layer:

· Biotech/longevity.

· Wearable tech.

· VR.

· AR.

· Internet of Things.

· The Metaverse.

· Behavioural economics.

Even looking at this list is sort of scary and awe-inspiring! And this kind of ignores the fact that somewhere in the next ten to twenty years, quantum computing will roll out. Google’s quantum computer, Sycamore, is already 1.5 billion times faster at computing than the world’s fastest supercomputer. QC operates more like nature and DNA than the 1s and 0s of current computers.

Once quantum arrives, everything will again accelerate and the absolute impossible dream becomes easily attainable. Oh, and quantum computing is doubly exponential.

Exponential Compounding – 2, 4, 8, 16, 32, 64, 128, 256, 512, 1,024.

Double Exponential Compounding – 2, 8, 32, 128, 512, 2,048, 8,192, 32,768, 131,072, 524,288.

Ten years: 1,024 vs 524,288...
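The two series can be reproduced in a few lines. This is a minimal sketch that assumes the second series multiplies by four at each step, as its opening terms (2, 8, 32, 128, 512) do; the function names are made up for illustration.

```python
def doubling(steps: int) -> list[int]:
    """Start at 2 and double each step: 2, 4, 8, ..."""
    return [2 ** k for k in range(1, steps + 1)]


def quadrupling(steps: int) -> list[int]:
    """Start at 2 and multiply by four each step: 2, 8, 32, ..."""
    return [2 * 4 ** k for k in range(steps)]


if __name__ == "__main__":
    # Print the two series side by side, one row per year.
    for year, (s, d) in enumerate(zip(doubling(10), quadrupling(10)), start=1):
        print(f"Year {year:>2}: {s:>5,} vs {d:>7,}")
```

Computed exactly, the quadrupling series passes 2,048 at step six and ends at 524,288 after ten steps, against 1,024 for plain doubling. Strictly speaking, a fixed multiplier of four is still ordinary exponential growth with a bigger base; a series of the form 2^(2^n) would climb far faster again.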

This is what lies ahead.

The 2 standard deviation recession

Currently, almost every single stock or ETF in my EA basket is trying to complete a 2 standard deviation vs log trend correction; a couple have already overshot as they had their S-curve moment where they either die or create Lindy effects from their survival.
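For anyone who wants to try this at home, here is one way to measure a "2 standard deviation vs log trend" reading. It is a sketch under stated assumptions, not necessarily how the GMI charts are built: it fits an ordinary least-squares line through log prices and measures each point's distance from that line in standard deviations of the residuals. The function name and the sample data are invented.

```python
import math
import statistics


def log_trend_zscores(prices: list[float]) -> list[float]:
    """Fit a straight line to log(price) vs time and return each point's
    distance from that line, in standard deviations of the residuals."""
    xs = list(range(len(prices)))
    ys = [math.log(p) for p in prices]
    x_mean = statistics.fmean(xs)
    y_mean = statistics.fmean(ys)
    # Ordinary least-squares slope and intercept of log price against time.
    slope = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys)) / sum(
        (x - x_mean) ** 2 for x in xs
    )
    intercept = y_mean - slope * x_mean
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    sd = statistics.pstdev(residuals)
    return [r / sd for r in residuals]


if __name__ == "__main__":
    # Invented series: steady growth with a wobble, then a sharp drop at the end.
    prices = [100 * 1.01 ** t * (1 + 0.03 * math.sin(t / 5)) for t in range(200)]
    prices[-1] *= 0.85
    z = log_trend_zscores(prices)
    print(f"latest reading: {z[-1]:.2f} SD from log trend")
    print("at or below -2 SD" if z[-1] <= -2 else "above -2 SD")
```

The choice of start date matters a great deal with this kind of fit, since it changes both the slope of the trend and the width of the band.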

Although I don’t have Google in my basket, I probably should considering all the stuff Google X is working on... 2SDs oversold...

AAPL – on its way – a bit more to go. Apple’s AR is going to shock the world in 2024...

Meta is well past its 2SD point and is being tested severely. I think it not only survives the metaverse pivot but thrives. This is its Amazon 2005 to 2007 moment or its 2001 moment...

Tesla – getting there...

Scottish Mortgage Trust... perfect...

Biotech ETF... already kissed the line...

ROBO, Global Robotics ETF... perfect...

Global Semiconductors... yup...

I expect a bit more weakness as Apple and Tesla get to their 2SD levels and then it’s time to add.

We already have the crypto side of this bet covered as it has been at its 2SD line for a while now...

2023 is unlikely to be the acceleration year. It takes time for liquidity to return and investors to gain their confidence back but that doesn’t mean the performance from the low can’t be extremely rewarding in Year 1.

Taking long-term positions in the fastest, most powerful secular themes is how you make the real money.

Don’t lose sight of this.

Good luck for 2023 and beyond!

The Story of GMI

Just over eighteen years ago, in October 2004, I decided to leave GLG Partners, the hedge fund giant I was working for. I loved working for GLG and they looked after me very well and gave me the freedom to try and run a longer-term macro book. Noam Gottesman, my old boss and founder of GLG, remains a cherished close friend and mentor to this day.

To be honest, my last year at GLG in 2004 was a pretty crappy year for me: I couldn’t find a way to make money after a great three-year run. Years like 2004 happen from time to time, ones where there is no trend and a long-vol position-taker like me simply can’t get an edge. Nothing in macro world was trending. You may remember the SPX in 2004 was an absolute chop-fest of zero trend...

10-Year Yields were the same...

... and the dollar went nowhere...

Additionally, I had built a decent-sized position in EM (Turkey, India and Russia mainly) but that wasn’t working either...

But the issue wasn’t the lack of ability to make money (as that can happen from time to time); it was the developments in the hedge fund industry that I didn’t like.

In the 2000s the investor base in hedge funds changed...

The glory years

The hedge fund industry in the ‘80s and ‘90s had been built on investments from family offices, a few Fund of Funds pioneers and a handful of sovereigns like the Kuwait Investment Office. The premise was that the high returns of the hedge funds came from higher volatility position-taking, with longer time horizons or leveraged shorter-term trading strategies. This was the rise of Soros, Tiger, Moore, Tudor and the other macro hedge funds, along with the glory days of prop trading at Goldman. Back in those days, funds had 10% to 15% volatility and a target annualised return of 20% to 30%. The best funds had several years of 50%+ returns. Those were the glory days.

The risk-adjusted returns of hedge funds, while pulled down a little by the 1998 LTCM debacle, had outperformed the market overall and that led to a HUGE influx of capital from new players – the pension funds and insurance companies – and a much broader pool of Fund of Funds (whose clients were the same pension funds, insurance companies and the new sovereign wealth funds).

The death of the macro returns

These new investors in the market were not after the same return streams as the Family Offices, who wanted large absolute returns. They instead wanted a return stream that looked more like their liabilities (i.e., fixed income-style returns) and they forced the industry to accept their vast capital, but with a focus on lower volatility.

Lower volatility is all well and good but it comes at a price: lower returns. This influx of new capital wanted monthly returns that moved less than 2%. This led to a focus on shorter-term return streams and monthly reporting (as opposed to quarterly). A hedge fund’s job was now not to produce the highest returns possible but the highest returns within a monthly variance of 1% to 2%. Although this was a very different game, the industry largely adapted.

The rise of the risk manager asset gatherers

The hedge fund industry has always been a game of money. It is an immensely stressful job, as many of you know, for which you understandably want to be rewarded. Generally, longevity in the industry is rare so you are incentivised to make as much as you can while you are able. In the ‘80s and ‘90s, that was all about risk taking, but in the 2000s it became all about managing the monthly numbers and accepting lower returns.

The stark reality is that the new, big-money investors wanted 6% to 8% annualised volatility and would accept 6% to 10% returns. This was very different from the 15% volatility and 30% target returns of old.
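The monthly and annual figures hang together. As a rough sketch, assuming the 2% monthly figure can be treated as a monthly standard deviation and that monthly returns are independent, so that volatility scales with the square root of time (a textbook simplification, not something stated in this piece), 2% a month works out to roughly 7% annualised, inside the 6% to 8% range. The function names are made up for illustration.

```python
import math


def annualise_monthly_vol(monthly_vol_pct: float) -> float:
    """Scale a monthly volatility to an annual one with the square-root-of-time rule."""
    return monthly_vol_pct * math.sqrt(12)


def return_per_unit_vol(annual_return_pct: float, annual_vol_pct: float) -> float:
    """Annual return divided by annual volatility (no risk-free rate subtracted)."""
    return annual_return_pct / annual_vol_pct


if __name__ == "__main__":
    print(f"2% monthly ~ {annualise_monthly_vol(2.0):.1f}% annualised")
    # Old-school target: 30% return on 15% volatility.
    print(f"Old school: {return_per_unit_vol(30, 15):.2f}")
    # New-money range: 6% to 10% return on 6% to 8% volatility.
    print(f"New money, best case:  {return_per_unit_vol(10, 6):.2f}")
    print(f"New money, worst case: {return_per_unit_vol(6, 8):.2f}")
```

On these numbers the new mandate did not just cap volatility; even at its best it targeted less return per unit of risk than the old 30%-on-15% model.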

Thus, the only way to make money was to create a monstrously large fund in terms of assets, to allow the management fee to be the primary driver of income for the hedge funds, and the only way to do this was via large multi-strategy funds that had massive diversification to dampen volatility and lower returns.

In short, it became an asset-gathering game and not a returns game. The job had shifted from making money for investors to not losing money and creating an income stream that resembled high-yield bonds (but with less risk).

This was the death of the star hedge fund manager and the rise of the star risk manager. The leaders in the industry switched from position-takers like George Soros and Julian Robertson to risk managers like Ken Griffin (Citadel) and Izzy Englander (Millennium).

Thus, hedge fund firms had to adapt or die if they wanted to thrive, and the job of being a portfolio manager became less attractive than being an owner of a giant hedge fund as returns came down.

Old-school macro made no sense anymore

I could see this back in 2003 and 2004 as these new investors piled into the market. I knew that it made no sense to manage your monthly P&L versus the overall total returns on your positions but that had become the game.

In essence, what this meant was that you were forced to trade a four-week view and every month you’d kind of reset your positions based on that so you could report to your investors that you hadn’t had a P&L variance of more than 2%. Instead of buying something and getting a 50% return over two years (while accepting periodic large drawdowns in that position) you had to manage your monthly drawdown in that position instead. Thus, if you made 10% in a position one month but it fell 5% the month after, you were basically forced to cut it. That was dumb.

By the early 2000s, trade time horizons were crushed and it was near impossible for macro investing to generate returns. Macro investing is all about capturing the asset price moves from changes in the business cycle and these take six months to eighteen months to play out.

As Paul Tudor Jones once said to me, “The best investors are those whose idea time horizon matches their investment time horizon”. The reality is that the industry had created a time horizon of one month and that made no sense for global macro strategies.

Macro traders like PTJ or Louis Bacon were able to survive in this environment as they were gifted enough to trade short-term time horizons to match their investors’ needs. But other firms just closed down (Tiger), while many managers went on to manage their own money (Nick Roditi) or managed capital where they had fewer constraints (Stan Druckenmiller left Soros Fund Management and managed Duquesne full-time).

Eventually, most of the legends closed their hedge funds and turned them into family offices to trade their own capital, free of the restrictions enforced by external investors (and obviously, their returns rose significantly!).

Time to get out

If I have a super-power, it is in spotting long-term trends and, when I saw this emerging trend of the death of hedge fund returns in 2003 and 2004, I knew the glory days of macro were over even though we had all enjoyed an amazing run in the preceding years. I understood my weaknesses and knew I was not a short-term trader as all my ideas were based on macro trends. I loved the macro hedge fund industry, but I recognised it was over for me.

I was also exhausted and burnt out from the whirlwind of six crazy years – the back-to-back chaos, but great macro environment, of the Asian crisis (and LTCM crisis), the Dotcom bust, the 2001/2 recession and 9/11.

I had made some decent money over the years both as a salesman and later as a hedge fund manager but at 36 years old I needed some kind of work to bring in an income and not erode my capital. I hadn’t really made enough to take the bet on living off my capital alone. I understood that income remains the most important asset you can have. But what to do?

Raoul – the writer/analyst

In 2003, Morgan Stanley invited me on a trip to China. At the time China was the big, exciting new market and everyone was wildly bullish. I went to China bullish and came back super bearish when I realised that most of the money being spent on the gleaming new cities, roads, bridges, railways etc., was wasted. It was all empty and unused. It was a massive destruction of capital. On the way back from China, I wrote up the trip notes in a piece called, “There’s Something Wrong in Paradise” and, on my return to the office, sent it to some industry friends.

It went viral...

Back in my Goldman days I had also become somewhat well-known for writing for clients on Bloomberg and for macro analysis on the GS chat system. After the China article was so well received, I knew I could write and that people valued my thoughts, and also realised I was a deeply independent thinker.

At GLG I subscribed to two main macro research services – Drobny Advisors (Steve and Andres Drobny) and DSG Asia (Simon Ogus). I reached out to both for advice, and both selflessly encouraged me to start my own high-end research service.

By the end of 2004 I had fifteen years’ business experience, the majority based around the hedge fund industry. I was not merely an observer but someone at the very centre. I knew how people thought, how they spoke, what information they valued and what pressures they were under. Almost no one else in the research business back then had that kind of experience; most came from research functions at banks, and not out of a hedge fund. I had an edge.

I also knew that the focus on short-term returns was going to produce suboptimal returns going forward and I wanted to prove that longer-term macro investing would produce far superior total returns over time, even with the larger volatility.

Time to do it

So, I decided to hand in my resignation, up my quality of life by moving to the Mediterranean coast of Spain (which had the added benefit of allowing me to filter out the noise of brokers, salespeople, research departments etc., to help me think independently) and start Global Macro Investor.

I talked through my plans with Noam, and he very kindly agreed to support me and has remained a GMI subscriber for the entire eighteen years (for which I am eternally honoured, grateful and proud). Goldman also backed me and have continued to subscribe in various guises since day one.

Beyond that, I had no idea if there would be any demand for Global Macro Investor but GLG and GS had de-risked it for me so I went ahead.

The very first GMI was thirty pages long. I sent it out to everyone I knew for free, received good feedback and continued writing. In March 2005 after the first three months, I had to start charging for the service and closed the free circulation.

It was nail-biting waiting to see if anyone would sign up. Then faxes (!!) started to arrive from people I didn’t even know, to subscribe for Euro 30,000 per annum ($50,000). I was blown away!

The first GMI subscribers were all hedge funds and bank prop desks and the number of subscribers continued to grow slowly by word of mouth.

The results

At the end of 2005, I had my first set of annual results and 71% of my trades were profitable and overall the returns were probably around 35% to 40% (for legal reasons I don’t produce a full set of portfolio returns). People took notice and some of the world’s most famous hedge fund investors joined GMI (and several of them are still with me seventeen years later).

In 2006 I had another good year with an approximate 30% return with 63% winners vs losers. In January 2007 when I reviewed the year in the annual Think Piece I wrote the following, which remains true to this day:

All in all, 2006 was another great year for GMI. As is my objective, I think I should have justified the subscription fee a few times over. Don’t expect it every year, but I am basically hoping to prove that a longer-term view, combined with in-depth research and some technical timing, will generate outsized returns.

There are so few participants in the medium to longer-term time horizon that gains are easier to come by than in the shorter term. This is a point I try to raise almost every month.

Then came 2007 and everything changed...

In 2007 I made an estimated 100%-plus (!!) in my recommended trade portfolio. Most of my gains that year came from being long equities in the first half and then short equities as the business cycle turned more concerning. I had begun positioning for the recession that I had been forecasting for the entire year.

I also gave some advice to my hedge fund clients and bank prop traders:

The low volatility, tight stops and small number of positions that investors seek now, can only mean one thing for managers – lower returns. It’s basic investment management maths. You have to run huge amounts of money to earn anything in that framework as it’s all about the management fee.

Once you manage to diversify away from G7 fixed income and FX, use less leverage, give up using stops and concentrate on the asset classes, trades and time horizons that others don’t venture much into, then I think you’ll find it easier to make more money consistently (although you will have to accept slightly higher volatility).

If you could ask for one wish this New Year it should be for your fund or book to be given the ability to broaden its horizons. Beg your investors or beg your boss.

2008 was the year that really made my reputation as I had forecast the recession (which very few had done) and the financial crisis and managed to produce another year of something like over 100% returns. But I knew then what I say today – when you think your shit smells of roses, you are about to get your face rubbed in it!

New subscribers piled into GMI, wanting to get access to the crazy returns, ignoring the fact that I always said things would be volatile and that I would not always be right.

I recognised that it was unlikely that I could continue to produce a string of returns as I had done in my first four years of GMI without soon taking a big loss. Here is what I wrote back in January 2009:

There are no investment heroes. Everyone does the best they can. I have had four years of very, very good returns but probability says that I will have a nasty year at some point. It could be this year or it may not be. We can’t tell in advance. All I aim to prove is that consistently, over time, using a decent framework, doing my homework and sticking to a longer-term time horizon, accepting some patches of tricky volatility and not using stop losses, that I will add value and generate decently positive returns. “Over time” is the key phrase. That is not necessarily year in and year out... although that would be nice.

Then 2009 came and my forecast came true. I had the worst year of my career, having made the fatal mistake of overriding my business cycle framework with emotion. I was far too bearish and got KILLED. I think 2009 was down around 50%. Urgh. Subscribers who didn’t understand the long-term game began to drift away. I knew this was coming.

But overall, one down year in five should be considered normal (as should a down year of roughly 50% after a string of +30% to +100% years).

In 2010 I eked out an up-year and started to regain my confidence and in 2011 had a decent year in a tough market. 2012 was a small down year, 2013 was flat and 2014 was very good (probably 40%+). All in all, my first ten years were very good overall with some exceptional years, a few years of chop and a strong finish. Macro markets were really tricky for several years.

In the first ten years, my share of winning trades was still around 60%, with eight out of ten positive years, which is very good indeed. In 2014 I had also begun to focus on much longer-term themes such as Monsoon and bitcoin, which have continued to evolve as a strategy for me as I think they capture even higher returns over time.

The GMI subscriber base had also begun to change significantly: the bank prop desk no longer existed, many macro funds had closed down and multi-strat funds were on the rise. There was a big increase in family offices who soon became the core subscribers of GMI. Family Offices have longer-term time horizons, can take more risk but also must focus on the business cycle to manage those risks. My extended time horizon with the ability to capture big medium-term trades suited them better than most. A lot of well-known hedge fund managers continued to subscribe to GMI but tended to begin to use it more for their personal investments, which were longer-term as opposed to for their funds. Fascinating...

The next decade of GMI began with a great year in 2015, with a big bet on the dollar and bitcoin that probably produced 20%+ returns overall; 2016 was a small down year (my third in twelve years) and 2017 was another good year with 720% gains in bitcoin and 40% gains in India!

2018 was another exceptional year with a massive bet on bonds coming right at the end of the year (Buy Bonds, Wear Diamonds) while 2019 was flat (I made a lot in bonds and gave back a lot in equities).

Then came 2020, which was my best performance in GMI’s history, coming in at a guesstimate of well over 200%! Bonds and crypto ruled the year, along with closing my short equities and credit just at the right time by sticking to my framework.

2021 also produced ridiculously stunning returns of something like over 200% again, this time via crypto again as well as carbon.

Over the period from 2019 to 2022, the client base of GMI expanded further into a different type of investor – the high-net-worth investor who is managing their own portfolio.

Family offices and HNW investors have similar risk profiles and time horizons, but the latter have had less experience with financial instruments and risk management so have a steep learning curve to climb.

On looking at performance overall, 2022 has been the second-worst year for GMI, but the main losses have come from giving back a chunk of the gains in the long-term crypto trades, which is expected, but they are still on the whole well up from purchase. I have been clear that although I have a few bets in the long-term book, ETH is by far the biggest for me. I’ve also been trying to use this bear market to build a position in Exponential Age stocks. Generally, I have traded little this year as I didn’t see the big setup. A fuller breakdown of how I thought in 2022 can be found at the beginning of the January 2022 Think Piece.

Overall, I tend not to think of the year-to-year machinations of returns in the long-term portfolio, but mainly when I close out the trade. No one enjoys volatility but it’s part of being a long-term investor. In the past from 2013 to 2017 I held bitcoin through a 500% rise and an 85% fall, eventually closing it out for a 1000% gain.

GMI has had four down years in eighteen, a few flat ones and a lot of incredible years. My winners vs losers still remain above 60%, which amazes me.

I am incredibly proud of what I have achieved at GMI over the years. It has been one hell of a ride. I never thought that my views on time horizon and secular trends would be proven to such an extent.

I am sure I have – without question – the best performance of any research service in history, but it has not been a straight line for sure! And there is never an opportunity to rest on any laurels.

But what I am truly most proud of is the community we have built around GMI. You guys and girls are simply magical. Whenever we get together at the GMI Round Table, magic happens. We have had some amazing ideas, made some great friends, had a lot of laughs, a lot of wine, been to some gorgeous places (Javea (Spain), Cayman, Miami and Napa Valley) and we have found all sorts of opportunities to invest in together. Many of you have been with GMI for a decade or longer and that is incredible and humbling. And I am privileged that so many of you have become life-long close friends.

GMI members are extraordinary and diverse. You even seed-funded Real Vision, which has gone on to become a financial media powerhouse and an incredible community in its own right, and many of you are investors in EXPAAM, my digital asset fund of funds which has just started its journey and I think will best capture the opportunity over time in digital assets. GMI members also partially seed-funded ScienceMagic.Studios, the business I co-founded that aims to tokenise the world’s largest cultural communities, which has also just commenced its journey. Also, if it wasn’t for Emil Woods, a very long-standing GMI member and good friend of nearly two decades, I’d have never discovered bitcoin or crypto back in 2013. I owe so much to you all.

But it is not just about coinvesting in my projects. Many of you have built remarkable new businesses from ideas and connections that came from GMI Round Tables, such as Mark Yusko’s Morgan Creek Digital or Dan Tapiero’s 10T. You guys have invested in each other in so many ventures I can’t count, from digital assets to hedge funds, from real estate to gold mines, from direct lending to startups.

Nothing exists like GMI. It remains the lynchpin of everything I do and how I think about the world, and things I love doing together with you all!

Thank you for everything. You bought the ticket; you took the ride. Let’s see where the journey takes us next...

One thing I do know, there are big opportunities out there for longer-term investors.

Generative AI By David Mattin

(New World Same Humans)

Introduction

You’ve heard the news. You’ve seen the images. You’ve witnessed the maelstrom of excitement on Twitter...

... that means you already know that right now, many believe something special is happening when it comes to AI.

That claim is being fuelled by passages of text like this:

Or images like this:

And responses like this:

What’s going on? And why should you care?

It’s always legitimate to be wary of tech hype. But in this essay I want to persuade you that this is a transformative moment. We already knew AI was set to be one of the defining technologies of the coming decade. But right now we’re witnessing the emergence of a whole new kind of AI: generative AI. And it’s doing fundamentally new things; things we didn’t expect – certainly not this soon, and maybe not ever.

I want to persuade you that the implications are vast. Industries will be reconfigured. Professions will be transformed and new ones will emerge. Entire domains of productivity will be supercharged.

The early signs are that investors agree. PitchBook says venture funding around generative AI has increased by 425% since 2020 to $2.1 billion this year.

But numbers like that paint only a fraction of the picture here. This gets way deeper. I want to dive into what’s happening, and what it all means for our shared future.

At the heart of my argument is a single idea: via generative AI, we’re about to live through an amplification of human creativity on a scale never before seen. It’s a moment comparable to the invention of the Gutenberg press in its ability to reshape the economy, culture, and the texture of everyday life.

That means some big, scary, and downright weird questions lie ahead, around our relationship with knowledge, the nature of art and culture, and even what it means to be human. Generative AI is going to touch the lives of billions of people – and cause them to start asking these questions, too.

These are bold claims. And sure, no one has a crystal ball. But as 2023 dawns, it seems that we’re at the start of something revolutionary.

In what follows, I’ll show you what I mean. We’ll go on a tour that ranges from transformative writing tools to the world’s most downloaded app this Christmas, to AI-fuelled popstars.

Strap in; it gets pretty wild.

A brief history of now

To understand what’s happening now, we need perspective.

Join me, then, on an extremely brief recent history of AI; one that will unpack what is special about this moment.

More than meets the eye

Back in 1997, another AI was making headlines. The IBM supercomputer Deep Blue had just beaten world champion Garry Kasparov at chess.

Put simply, this is how AIs such as Deep Blue worked: take a highly structured domain of activity (chess!) and manually teach an AI the rules of that domain. The performance of the AI then depends on sheer calculative power. Deep Blue won because it could analyse 200 million moves per second; it employed what AI scientists called brute force.
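To make the brute-force idea concrete, here is a minimal sketch. It is not Deep Blue's code, and it uses a hypothetical toy game rather than chess: players take turns removing one to three stones, and whoever takes the last stone wins. The rules are written in by hand, and the program simply tries every possible move to see who can force a win. The function names are my own, and the cache is only there to avoid recomputing positions.

```python
from functools import lru_cache

# Hypothetical toy game (not chess): players alternate removing 1-3 stones;
# whoever takes the last stone wins. The rules are hand-coded, as described above.
MOVES = (1, 2, 3)


@lru_cache(maxsize=None)
def can_win(stones: int) -> bool:
    """Return True if the player to move can force a win from this position."""
    # Exhaustively try every legal move. A position is winning if at least
    # one move leaves the opponent in a losing position.
    return any(not can_win(stones - m) for m in MOVES if m <= stones)


def best_move(stones: int):
    """Return a move that forces a win, or None if every move loses."""
    for m in MOVES:
        if m <= stones and not can_win(stones - m):
            return m
    return None


if __name__ == "__main__":
    for pile in (4, 5, 10):
        print(pile, "stones ->", best_move(pile))
```

Nothing here is learned from data: the quality of play depends entirely on how many positions the machine can search, which is the point of the brute-force label.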

We’ve come a long way since then. Today, instead of having to teach AIs manually about the rules of a given domain, neural networks leverage multiple connected algorithms to dive into the data and discern the rules for themselves. These machine learning methods make for far more flexible, and deeper, pattern-spotters. It means the kind of AI that can learn to diagnose cancer from MRI scans or navigate a car through traffic.

The wave of generative AI tools causing such excitement now are built on neural networks. But a revolutionary new kind: transformer models.

Transformer models use a new technique called self-attention that gives them superpowers when it comes to handling sequential data (that’s any data where the order of the elements matters; think strands of DNA, or the words in a sentence). Thanks to special data tagging techniques, transformer models can enrich their examination of each element of data with relevant context provided by the rest of the dataset. This allows them to build sophisticated models of the underlying relationships that exist inside the information.
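For readers who like to see the mechanics, the sketch below shows a heavily simplified version of self-attention in plain Python. It rests on assumptions of my own: each element of the sequence is already a small list of numbers, and the learned projection matrices that real transformer models apply to produce queries, keys and values are left out. What remains is the core move, in which every element is rewritten as a weighted blend of all the elements, with weights based on how similar they are.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified self-attention over a sequence of equal-length vectors.

    Assumption: queries, keys and values are the input vectors themselves.
    Real models first multiply the inputs by learned weight matrices.
    """
    dim = len(vectors[0])
    output = []
    for query in vectors:
        # Score this element against every element (scaled dot product).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all elements together, weighted by relevance to this one.
        blended = [
            sum(w * value[i] for w, value in zip(weights, vectors))
            for i in range(dim)
        ]
        output.append(blended)
    return output


if __name__ == "__main__":
    sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in self_attention(sequence):
        print([round(x, 3) for x in row])
```

Each output row carries information from the whole sequence, which is the sense in which every element is enriched with context from the rest of the data.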

It may sound an obscure technical shift. But the resulting differences are profound.

Before, we had to train neural networks by exposing them to large amounts of data labelled by hand. Creating those labelled data sets was slow and expensive.

Transformer models dispense with all that. Instead, we can let them loose on unlabelled data in the wild and they’ll teach themselves to recognise the deep underlying relationships that structure that information. It means we can train these models on ABSURDLY MASSIVE datasets. And that they’re insanely flexible, deep learners, able to recognise relationships that are nuanced to the nth degree.
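One way to picture learning without hand-made labels: the raw text supplies its own answers. The sketch below is an illustration under my own assumptions (it splits on spaces, whereas real models work on sub-word tokens, and the function name is hypothetical). It turns an unlabelled sentence into training examples where the "label" is simply the word that comes next.

```python
def next_word_examples(text, context_size=3):
    """Build (context, target) training pairs from unlabelled text.

    Assumption: words are separated by whitespace. Production models use
    sub-word tokens and much longer contexts.
    """
    words = text.split()
    examples = []
    for i in range(context_size, len(words)):
        context = tuple(words[i - context_size:i])  # the preceding words
        target = words[i]                           # the word to predict
        examples.append((context, target))
    return examples


if __name__ == "__main__":
    for context, target in next_word_examples("the cat sat on the mat"):
        print(context, "->", target)
```

No human had to label anything, which is why the approach scales to enormous datasets.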

The result is AIs capable of doing things that, just a few years ago, we hardly dared dream about.

The collision with creativity

So far, so technical. It’s the implications of all this that really matter.

When it comes to understanding those implications, a simple framing thought can help.

The engine that drives so much of the human story is a collision between ever-changing technology and unchanging human needs. When an emerging technology unlocks a new way to serve a fundamental human need – think convenience, security, status, and many more – that’s when new behaviours and mindsets arise at scale.

This framework helps us zero in on what is happening now.

In November 2020, Google’s DeepMind announced that they’d used a transformer model to help solve the iconic protein folding problem, which centres on prediction of the structure of proteins.

It was a huge achievement, with the potential to unlock advances across the life sciences.

But we always believed that AIs would impact scientific and technical domains. An AI-fuelled solution to the protein folding problem may have come sooner than we thought it would. But it was still aligned with the general thrust of our expectations.

Don’t get me wrong: generative AI’s impact on our eternal quest for knowledge and technical mastery looks set to be immense, and that really matters. It’s a truth we’ll explore in this essay.

But look at the outputs I shared in the introduction. They show AI colliding with an entirely different set of fundamental human impulses. The impulse to tell stories. To make beautiful images. To create culture.

What we’re seeing now is the collision between AI and creativity. And that’s something we simply didn’t expect.

Back in 2013, academics at Oxford University predicted the jobs most likely to be disrupted by AI (https://www.oxfordmartin.ox.ac.uk/downloads/academic/The_Future_of_Employment.pdf). At the top of the list were routine tasks such as telemarketing and brokerage clerking. At the bottom? Creative professions such as writer and illustrator.

Sure, we told ourselves, AIs are surpassing us when it comes to mathematical and technical challenges. But creativity? That’s ours. That’s what we’ll have left when the machines take over. The story of generative AI across the last 24 months has exploded that notion. And we’re still scrabbling to understand what that means.

It’s this, above all, that is fuelling the excitement. And the fear.

And while the last year has seen a rollercoaster for generative AI innovation, the journey is only just getting started.

So, let’s dive deeper into where we’re at now, and where all this is heading.

The magic language box

The revolutionary moment we’re approaching is fuelled primarily by a specific kind of transformer. That is, the large language model (LLM).

Get a grip on what LLMs are doing and what’s new about it, and you’re a long way towards understanding the scope of all this.

And when it comes to the wave of innovation we’re now inside, the rollercoaster really began in 2020 with the release of a particular LLM: OpenAI’s GPT-3.

GPT-3 is an LLM trained on a massive corpus of text – an appreciable amount of the text ever uploaded to the internet. All of the English-language Wikipedia, comprising more than six million articles, makes up around 0.6% of its training data. That process has produced a model with an incredibly detailed picture of the underlying relationships that weave words together; the relationships that constitute what we humans call meaning.

Essentially, GPT-3 is trained to take a text input and use complex statistical techniques to predict what the next few lines of text should be. Type something in; get something back. Get back, that is, a novel text output – something that didn’t exist until that moment.
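The "type something in; get something back" loop can be sketched with a toy model. To be clear, this is not how GPT-3 works internally: the example below is a simple word-pair counter of my own devising (the function names and the sample text are hypothetical), whereas GPT-3 is a transformer with billions of parameters. The shape of the task is the same, though: given the text so far, pick a likely next word, append it, and repeat.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count which word follows which in the training text."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, prompt, max_words=5):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    out = prompt.split()
    for _ in range(max_words):
        followers = counts.get(out[-1])
        if not followers:
            break  # the model has never seen this word, so it stops
        out.append(followers.most_common(1)[0][0])
    return " ".join(out)


if __name__ == "__main__":
    corpus = "the frog sat on the log and the frog sat still"
    model = train_bigrams(corpus)
    print(generate(model, "the", max_words=2))
```

A real LLM replaces the counting table with a learned model of far richer relationships, and usually samples from the likely next words rather than always taking the top one.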

When OpenAI made GPT-3 available to selected users in June 2020, the astonishment was loud and universal. The model was a quantum leap advance on its predecessor, GPT-2 (more on the coming GPT-4 later). Here for the first time was a tool that could generate prose with human-level fluency and the appearance of true understanding.

It was as though a magic language box had just fallen to Earth.

The penny dropped for me, as for millions of others, when the renowned AI artist Mario Klingemann shared a short story written by the tool. Klingemann asked GPT-3 to write a story about Twitter in the style of the revered 19th-century English humourist Jerome K Jerome. Here’s the first paragraph:

It made perfect sense. It even sounded just like Jerome. Meanwhile, the examples poured in. The frog poem you read at the beginning of this essay? Written by GPT-3.

So wait, now we can create pages of convincing literary pastiche, poems, essays, and more just by typing a simple text prompt into a box?

Oh shit. What the hell is happening?

Today, GPT-3 and similar LLMs are fuelling a growing array of startups and inventions. The playing field is already massive, and we can only catch a glimpse here.

Look, for example, at Keeper Tax, which leverages GPT-3’s processing and summarisation abilities to help automate accounts and record-keeping (https://gpt3demo.com/apps/keepertax). Or Tabulate, which uses GPT-3 to auto-generate useful spreadsheets (https://gpt3demo.com/apps/excel).

The potential for LLMs to automate away a whole ton of time-consuming tasks – and to transform professions from accountancy to data science – is huge.

Another domain set to be transformed? Online retail, worth over $5.2 trillion a year according to Statista. Get ready for a new wave of conversational chatbots with a difference: this time, they’ll actually work. Virtual assistants fuelled by GPT-3 and other LLMs will be able to conduct fluent and useful conversations with customers, making for a transformed online shopping experience.

Many standout innovations, though, are taking aim at creative and intellectual domains.

Look at Jasper, a generative AI tool intended to supercharge marketing professionals. Just describe what you want in a few simple sentences, and Jasper will generate a complete blog post, LinkedIn article, Twitter thread, or YouTube video script that fits the bill:

Writing is typically a slow, painstaking process. Jasper can push out a complete Facebook ad or blog post in seconds. Sure, it will need a little editing. But the possible productivity gains are insane.

The startup says 80,000 marketers at Airbnb, IBM, HarperCollins and beyond are already using their platform.

According to Statista, content marketing is a $76 billion a year industry. And in October this happened:

Want more?

Students are using GPT-3 to write their essays for them. Head teachers at leading schools in the UK are already sounding the alarm – and generating headlines like this:

We’re losing the ability to distinguish between an essay written by a student and one written by an LLM. That’s the entire education industrial complex reconfigured, then.

Meanwhile, Google recently showcased Wordcraft, an LLM tool intended to supercharge novelists (https://wordcraft-writers-workshop.appspot.com/).

Literally as I was writing this essay DeepMind published a paper on a new tool called Dramatron, which they say generates long and coherent storyline and dialogue ideas for dramatists (https://arxiv.org/abs/2209.14958).

This Christmas’s hit movie was Glass Onion, an intricately plotted murder mystery. We’re heading towards a world in which a generative AI will be able to generate a script for the sequel, complete with compelling plot twists and deadpan one-liners for Daniel Craig.

I could go on (and on). But there’s so much more to look at. First among them, the way LLMs are set to transform our relationship with the internet.

Tapping the hivemind

In November, OpenAI launched the latest version of GPT-3: ChatGPT. It’s powered by the most powerful model yet, which outputs even higher quality text. And it’s optimised for back-and-forth dialogue with the human user.

Because ChatGPT is even more powerful and easier to use than previous iterations, it spurred a whole new wave of excitement. OpenAI said the tool crossed 1 million users in just five days, as people rushed in to ask ChatGPT to do everything from writing limericks to answering technical questions about lines of code.

All this makes clear another revolution taking shape before our eyes: generative AI as a whole new kind of search.

Millions are now turning to ChatGPT with the kind of enquiries they’d once have brought to the Google search bar. Who was Thomas Cromwell? Explain the second law of thermodynamics. What is the meaning of life?

And yes, all this has done much to expose the current limits of LLMs.

ChatGPT is liable to spit out persuasive-sounding but factually inaccurate answers. It can get basic geography and history wrong. It even struggles with simple arithmetic. As much as it might seem otherwise, large language models don’t understand what they’re saying. They don’t have common sense. What’s more, because they’re trained on data written by humans, they tend to reproduce the biases and prejudices that are sadly common among us. One user asked ChatGPT to describe a good coder; ‘white’ and ‘male’ were attributes included in its answer.

But over time, these problems will be ameliorated by further fine tuning and other techniques. There can be little doubt: a search earthquake is coming.

Google built a $1 trillion business on a new way to access human knowledge. As 2023 dawns, we’re only at the beginning of understanding what these large language models really are. One way to understand them? As a radical new way to tap into our shared cultural and intellectual heritage – to tap, that is, the human hivemind – and produce new outputs that are founded in it.

What this means is a new kind of relationship with the internet; a new relationship, really, with knowledge itself. The arrival of LLMs means the arrival of a revolutionary tool for thought that will supercharge science, philosophy, social research, and more. And allow for the emergence of new text-based cultural forms: weird, hybrid forms that are part essay, part game, part infinite imagined world.

And, surely, it means the birth of new trillion-dollar empires.

Feels like a lot to take in?

Then take a deep breath, because we’re about to look at what happens when you tie LLMs to other kinds of tools.

This is where things get really wild.

Because in 2022, via this combination of LLMs and other AI models, we were fast-forwarded into a strange new future. Finally, we’re zeroing in on the Gutenberg moment I’ve been promising.

A Gutenberg moment

Large language models are a revolution all of their own. But we can also hook them up to other kinds of neural networks, capable of generating non-text outputs.

In 2022, that truth came to life. And the result was a dizzying hall of mirrors.

Enter the labyrinth

Last year the generative AI space caught fire. The fuel? Text-to-image models.

These tools typically hook up a language model that can understand a text prompt to a kind of neural network called a diffusion model, capable of generating images that satisfy the prompt.

OpenAI’s text to image generator DALL·E, released early in 2021, offered a glimpse of the potential. But it was this year’s release of DALL·E 2 and other major text-to-image generators – especially Midjourney and Stable Diffusion – that changed everything.

DALL·E 2 was announced in April. Look at its response, generated in seconds, to this simple text prompt:

A photo of a teddy bear on a skateboard in Times Square... it’s become an icon of the generative AI wave:

By October DALL·E 2 was generating over 2 million images per day.

Stable Diffusion was released in August. It’s a model trained on 2.3 billion image-text pairs. Unlike DALL·E 2, which is accessed via the cloud, Stable Diffusion is open source; anyone can download the model and run it from their own relatively modest computer. Just three months after the August release came version 2.0, which made possible a four-fold increase in image resolution.

Yes, a 4x improvement in image quality in three months. We’re now approaching insane levels of photorealism:

This is not a photo: insane!

More than 10 million people have poured into Stable Diffusion to create incredible images in seconds via simple text instructions. Welcome to a world of mad art mashups and impossible portraits. Bob Dylan as painted by Vincent Van Gogh, anyone?

In October the parent company, Stability AI, took $101 million in funding at a valuation north of $1 billion.

Is this all happening fast enough for you? It didn’t stop with text to image...

In October, both Meta and Alphabet showcased text to video tools. With these, we’re seeing the near-instant generation of five-second animated video clips simply by typing a one-sentence description into a box:

Seriously, go and watch some (https://imagen.research.google/video/).

Alphabet also offered a glimpse of another tool, Phenaki, that uses more detailed prompts to generate longer clips.

Minutes of video, simply by typing a one-line description? I mean, are Disney seeing this?!

Beauty as a service

Right now we’re talking about images and short videos. Eventually, we’ll get to full-length movies.

The ability to create beautiful images or animations has always been the preserve of a professional cadre of artists, designers, and illustrators. These tools have blown that old world out of the water. Boom: gone. In this new world, creating a beautiful image is now as quick and easy as ordering new washing powder on Amazon, and video will be next.

Industries grounded in arts and visual representations are set to be transformed. Think magazine illustration, architecture, industrial design, and many more.

Look at the Economist using another text to image tool, Midjourney, to design its front cover illustration this summer. Last year(!) that would have needed a skilled professional; this year it took a one-line text prompt.

Or check out Lensa, a generative AI app that creates illustrated profile pictures based on a user photo:

This December, it became the world’s most downloaded app:

Beautiful illustrations have gone from prized rarity to commodity. Animated shorts are going the same way. This is all being achieved with tools that barely existed twelve months ago. It’s taken one year to reconfigure the future of a vast range of creative professions.

If anyone claims to understand all the implications of this, don’t believe them. Huge questions abound.

What does this do to the careers of millions of illustrators and designers?

Do artists whose work is used to train these models deserve to be paid for their contribution?

What about the generation of pornography and other toxic material? DALL·E 2, Stable Diffusion, et al have guard rails around them to prevent obvious misuse, but no guard rails are ever perfect.

We’re going to have to feel our way towards answers. But even this is just the start.

And to see where all this is ultimately heading, it pays to listen to one of the key players of the generative AI movement.

Text to anything

Where does the hall of mirrors end?

In answering this question, we arrive at the motherlode that I’ve been driving at all this time. The Gutenberg moment I promised in the introduction.

The founder of Stability AI – parent company to Stable Diffusion – is a British hedge fund manager turned technologist called Emad Mostaque. And he has plans that extend way beyond even the amazing text to image tool that has made his startup the hottest on the planet.

Mostaque says he’s working with the music industry to train a massive text-to-music model. Want Motown soul crossed with 12th-century Gregorian chant? A heavy metal Ode to Joy? Soon, all you’ll have to do is type the description. Mostaque calls this tool ‘a generative Spotify’.

Even that, though, is far from all. Soon, says Mostaque, we’ll see the blossoming of thousands of models trained to generate all kinds of creative output.

Want to create a TED-style presentation on the future of self-driving cars? A design for a new landmark skyscraper in Dubai? A script for a children’s movie about cute talking frogs? Just type the prompt and hit return.

That’s the head-spinning future that we are now hurtling towards. Right now, we have text to image and text to video. Coming soon? Text to anything.

Text to anything is the ‘where’ this ends. And it’s hard to overstate the revolutionary implications. What does that do to the economy? To human consciousness?

As Mostaque says:

“This is a society-wide shift... it’s a true exponential change. When you look at AI research papers, it’s doubling every 24 months. And like I said, exponentials are a hell of a thing. We are not equipped to handle it.”

No one can fathom the consequences of all this just yet. But a few are becoming clear...

A massive amplification of human creativity is coming. Billions will be equipped with tools for creation the like of which we’ve never seen. As 2023 dawns, we stand at the eve of a Cambrian explosion of creative and intellectual output.

And yes, it’s a moment comparable to the arrival of the Gutenberg printing press in the 15th century. In fact, now we’ve arrived at the heart of my argument I’d venture even further...

We may come to believe that the arrival of these tools is comparable even to the invention of writing itself in their power to transform human creative exchange and modes of consciousness. Because that, it seems to me, is what these tools will do. They’ll change the very way we think.

I know that feels crazy. But project these AI tools 20, 30, 50 years into the future. Imagine a time when people can’t believe you couldn’t generate an accomplished picture, book, script, or design simply by hitting a button.

It’s just huge. And it’s coming.

A new golden age

There are some who greet all this with dismay.

The rise of generative AI tools such as GPT-3 and Stable Diffusion will mean, they say, the devaluation of human creativity. A bleak new future in which we hand over art, beauty, and ingenuity to machines and live in a world of automated blandness.

In fact, it’s more likely that the opposite is true. We’re approaching a new golden age for the arts, culture, design, and more.

Sure, creative outputs will be commoditised. We’ll have far more of everything – images, stories, designs – than we can possibly consume. The result? The bar for creative excellence will be dramatically raised. What we call a stunning image today will be ordinary tomorrow.

And that won’t mean the end for human creativity. Quite the opposite. Only the most creative people, who are deeply skilled in whispering to generative models, will be able to create art, designs and more that stand out amid all the noise.

So much about the future that generative AI will shape is uncertain.

But one thing we can bet on? The eternal human impulse towards creativity is going nowhere. We’ll always prize exceptional cultural artefacts created by living, breathing people. We’ll always revere the people who make them. And much of the best work will be created by people working in collaboration with AI.

Human creativity isn’t being banished. If you take one thing from this essay, take this: creativity is about to be more important, useful, and profitable than ever.

WTF next?

If you’re persuaded that this is a transformational moment, then one question naturally follows.

WTF does all this head to next?

It’s expected that GPT-4 will arrive some time in 2023; many are anticipating another quantum leap in capability.

GPT-3 is an LLM with 175 billion parameters; roughly speaking, that means it has developed a framework that contains 175 billion different rules about how the elements of language relate to one another. Parameter size is far from the only factor that governs output quality. But so far, the two have shown a close positive correlation.

As you read in a previous instalment of GMI: we’ve seen an 8,000x increase in parameter size in four years!
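To put the 8,000x figure in perspective, a quick back-of-the-envelope calculation helps. The sketch below assumes, purely for illustration, that the growth was smooth and exponential across the four years; in reality model sizes jump in steps with each release. The function names are my own.

```python
import math


def annual_growth_factor(total_multiple, years):
    """Yearly multiplier implied by a total increase over a number of years."""
    return total_multiple ** (1 / years)


def doubling_time_months(total_multiple, years):
    """Months per doubling implied by the same total increase."""
    doublings = math.log2(total_multiple)
    return years * 12 / doublings


if __name__ == "__main__":
    print(round(annual_growth_factor(8000, 4), 2), "x per year")
    print(round(doubling_time_months(8000, 4), 1), "months per doubling")
```

Under that smooth-growth assumption, an 8,000x increase in four years works out to roughly a 9.5x increase every year, or a doubling about every three and a half to four months.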

That’s how fast this is evolving. With GPT-4 then, expect a model able to retain narrative coherence across long – even book-length – works of prose, cope with complex step-by-step reasoning, and integrate real-world context to ensure factual accuracy. If university professors think the student essay is dead now, they’re about to get a whole new shock.

Meanwhile, Emad Mostaque reckons we’re within five years of photorealistic, movie-length animated films generated entirely by AI.

And Stability AI are reportedly working on techniques that could speed up image generation by up to 256 times. That will mean real-time generation; images that appear pretty much as you type.

But the most powerful use cases? They’re often about generative AI smashing into other emerging technologies.

To close, I want to offer a glimpse of three powerful convergences happening now. Three questions, really, to be explored more fully some time in the future.

New worlds

The last twenty-four months have also seen a ton of hype around the metaverse.

Don’t be fooled by the backlash currently underway. Sure, there are good reasons to wonder if Zuckerberg’s Meta can get VR technology to market quickly enough. But the underlying principle here – immersive virtual worlds in which billions gather to play, socialise, work, transact and more – is powerful:

Multiplayer video game Fortnite is now an immersive virtual concert venue.

Right now, building such worlds, and creating digital objects inside them, takes teams of skilled coders and designers.

I think you know where this is heading.

Soon we’ll be able to generate entire virtual worlds via simple natural language descriptions. Text to worlds.

How does our relationship with reality change when we’re able to generate new, immersive, compelling realities simply by describing them?

Generated humans

Virtual humans are sophisticated digital avatars that look and sound like real people. And they’re coming for you.

Look at Samsung’s NEONs: a project to create a range of ‘artificial humans’. Samsung want NEONs to be in-store customer service assistants, doctor’s receptionists, airport greeters, and more.

Samsung’s Neon virtual humans

Or look at the growing band of virtual influencers and popstars. Think Lil Miquela, with 2.9 million followers on Instagram and a host of brand sponsorships; she reportedly earned her creators £9 million last year.

Lil Miquela is a leading virtual influencer

Right now, these kinds of virtual humans are little more than convincing digital shells voiced by real people.

But soon, GPT-4 will do the talking. Virtual humans will spring into a whole new kind of vivid life. We’ll have convincing and responsive AI-generated actors, popstars, models and more, all fuelled by LLMs.

What’s more, billions of people will soon have the ability to imagine and instantly generate their own virtual human and talk to it endlessly.

What happens to the entertainment industry in a world of compelling, utterly convincing virtual humans?

Virtual companions

Where does the convergence of generative AI, the metaverse, and virtual humans lead?

For years I’ve been writing about the emergence of virtual companions: AI-fuelled entities that become an assistant, counsellor, and even friend to the user.

They already exist. See Replika, an AI chatbot intended to become a companion and guide. It’s seen over 10 million downloads, and use spiked during lockdowns.

Replika is an AI-fuelled virtual companion

Replika is okay but limited.

But now, via LLMs, we’ll see the rise of virtual companions that can conduct human-level conversation full of insight and nuance. They’ll exist inside photorealistic virtual humans that look and sound just like real people.

Just imagine: an assistant who knows your schedule, a counsellor who knows your deepest hopes and dreams, and a philosopher with access to all the world’s knowledge. In your pocket 24/7; always ready to talk.

It’s sixteen years this year since the launch of the iPhone.

Are LLM-fuelled virtual companions the next transformative innovation, set to change the texture of life for billions around the world?

Our place in the world

It’s been a whirlwind tour. And the truth is, we’ve barely scratched the surface.

As we’ve seen with my three closing challenges, at the outer edges of all this the questions get philosophical.

How should we understand what is happening with generative AI and with the broader rise of machine intelligence?

We can only begin to answer that question when we zoom way out and take the long view.

For 100,000 years we humans have been unequivocally the most intelligent collective on the planet. We’re the only creature on Earth with higher, symbol-manipulating cognitive powers. Now, that is changing. And the effect is something akin to being invaded by aliens.

Our understanding of human consciousness is limited. Still, we know these AIs are not (yet) conscious in any commonly understood sense of the term.

But they do have a strange new way of seeing the world; one all of their own. And across the years ahead, they are going to impose their form of intelligence on us. In the name of efficiency, technical mastery, and profit, ever more of the processes that underpin technological modernity will be handed over to AIs. We’ll be viewed, analysed, and served by them. Our medicines, buildings, and aircraft will be designed by them. We’ll be entertained by them, via the books, movies, and music they write. We’ll whisper our deepest secrets to them and listen to their replies.

In his weird and mesmeric final book, Novacene, the English technologist James Lovelock – responsible for the Gaia theory – argued that our machine overlords will one day view us as we do plants: a lesser, and largely inert, form of life.

Will we hand over the running of our world to these new kinds of intelligence, and live in the greenhouse they provide?

Or will we merge with them, and become something entirely new?

That’s a question for another day.

For now, strap in for 2023. It’s going to be a wild ride all over again.

Written by David Mattin:

Subscribe to his newsletter, New World Same Humans:

https://newworldsamehumans.com/

https://www.linkedin.com/in/david-mattin-82763413/

The Most Urgent Thing in The World

Digital ID

I didn’t expect this GMI to be so full of AI-related stuff but, as I have said numerous times, the world has changed... dramatically.

One of the single most important things to do when something this big comes at us this fast is to consider the unintended consequences. I have discussed AI and robots before, and their longer-term impact on humanity as a whole.

For those of you spinning to catch up, I URGE you to read Homo Deus by Yuval Noah Harari in addition to a book I have just finished – Scary Smart: The Future of Artificial Intelligence and How You Can Save Our World by Mo Gawdat, the ex-head of Google X. These two books frame how big a societal/humanity issue AI is, as well as how humanity benefits if we get it right.

Personally, I think the singularity is inevitable. According to Gawdat, AI is a pandemic, a virus that is unstoppable. He is one of the world’s experts on AI and in his view, AI is going to be smarter than humans at every single task by 2029 as a result of the compounding power of exponentiality. Yup, six years away...

And by 2049, using Ray Kurzweil’s estimates, Gawdat suggests that AI will be one billion times smarter than any human and the world will have shifted to where humans are the “dumb apes”, and AI is the apex lifeform. He suggests that by then the gap between AI and humans will be like the gap between Einstein and a fly. He also thinks it is impossible to stop due to game theory and the prisoner’s dilemma. I am certain he is directionally very right.

The US Election

However, the purpose of this article is not to discuss the consequences, but to examine something much more urgent and pressing: the US election in 2024.

Huh?

Let me explain...

In the next six months we will have both long- and short-form AI text-to-video. Put in any text and the AI will create a short video or even a full-length film. In itself this is ground-breaking but then consider deep fakes. Deep fakes are completely realistic images or videos of things that never actually happened. Deep fakes exist in audio too.

Check this deep fake video of Joe Biden supposedly singing “Baby Shark” that took the world by storm two months ago...

Right now, it’s a slow(ish) process to create these videos and audio files but with AI scaling so fast, it will soon (within the next few months) be possible to create millions of videos in minutes.

If you don’t believe me, then watch this video of an interview that didn’t happen between Joe Rogan and Steve Jobs which was entirely created by AI...

Soon you will also see a gigantic sporting event (I can’t reveal it as I’m sworn to secrecy) between two of the most famous athletes in history, that never actually happened. It will play out in real time with no one knowing the outcome... and it will be ultra-realistic in every way embracing the full skill sets of both athletes.

This is an AI deep fake. Whilst it may at first appear innocuous, it is a much bigger deal than a simple sporting event. It is the complete transformation of everything we know to be real.

And it gets more concerning...

Add to this the fact that AI knows what political messages resonate with you and imagine that it can create millions of videos of Biden or whoever – that never existed – which are so realistic that you won’t question them. Yes, they will be used to expand traditional political messaging to scale at a rate we have never seen before, but they will also allow for the scaling of messages for nefarious means by sovereign players, opposition parties, or just bad actors.

Also, remember that ChatGPT can write any article in any style. Ask it to write an article claiming that Joe Biden is going to nuke Russia next week in the style of the New York Times and it will execute it perfectly. Just brand it as a NYT article and you won’t know the difference. If this happens millions of times on different articles, all across the web, then how can anyone police it?

Remember how big an issue Cambridge Analytica was? Well, this is just child’s play versus what will be possible by 2024.

Within six to twelve months, we will not be able to tell if any article, podcast, radio broadcast, photo or video is real or not. There will be such a plethora of fakes that all trust in the system will break down and totally shatter. Just think how bad social media is today and then add all these elements to it and you can start to see the size of the problem.

It’s not just going to be politics, but millions of scams will be virtually undetectable too. No one will know from whence the content came either as AI leaves few traces and can adapt and reinvent itself millions of times in no time at all.

How the fuck are we going to deal with this in a very close election where society is already massively divided, and the world is getting more and more polarised? How do you stop millions of personalised conspiracy theories all arising to undermine the credibility of anyone, or everyone?

Do you think that this knowledge has not been adopted by the Russians, the Chinese, the Iranians, the North Koreans, or anyone else with vast compute power? Do you honestly think this technology will not be used as a massive leverage tool against Western society in order to make it destroy itself from within? Do you think that organised crime won’t use it or isn’t using it already?

Imagine a world of millions upon millions of fake videos, articles, podcasts bombarding us on every platform, intelligently learning what has the maximum impact by scanning all social media and then adapting that messaging even further. It is a true viral pandemic of false information.

Add to that the millions upon millions of AI bots that already agitate and spread false information and create societal discontent online, and imagine those bots deep learning and holding intelligent, but entirely false, conversations.

No person and no content online can soon be trusted (but may well be trusted by people that know no better). And I’m talking very soon...

I cannot express how serious this is and how terrifying the outcomes. If you want to push a country to civil war, you can tear society apart with limited effort using AI and deep fakes. It is also very cheap to accomplish.

We have already tried to get social media and YouTube to police themselves and that has failed; we simply don’t have the resources. Add to that the fact that powerful domestic state political actors on all sides influence social media themselves for their own gains and it becomes an impossible task.

We need a solution, and we need it FAST.

Enter Blockchain.

Digital ID has been something that many people have talked about. The discussions are currently centred around the need for online privacy, where we can complete KYC/AML but with that information remaining hidden by Zero Knowledge Proofs – ZK Proofs. This is a very clever cryptographic technique that proves something to be true without showing the underlying data. ZK Proofs are at the cutting edge of cryptography and will play a very important role in our future.
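To make the idea of proving something without showing the underlying data a little more concrete, here is a minimal sketch of one classic construction, a Schnorr-style proof of knowledge made non-interactive with a hash. It is only an illustration under stated assumptions: the group parameters are toy-sized and useless for real security, the function names are my own, and it is not how any particular digital ID project works. The prover shows that they know the secret behind a public key, and the verifier never sees that secret.

```python
import hashlib
import secrets

# Toy group parameters (assumption, far too small for real use):
# G generates a subgroup of prime order Q modulo the prime P.
P, Q, G = 23, 11, 2


def _challenge(public_key, commitment):
    """Derive the challenge by hashing the public values (Fiat-Shamir style)."""
    data = f"{G}|{public_key}|{commitment}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret):
    """Return (public_key, commitment, response) without revealing `secret`."""
    public_key = pow(G, secret, P)
    nonce = secrets.randbelow(Q)          # fresh randomness for every proof
    commitment = pow(G, nonce, P)
    c = _challenge(public_key, commitment)
    response = (nonce + c * secret) % Q
    return public_key, commitment, response


def verify(public_key, commitment, response):
    """Check G^response == commitment * public_key^c (mod P)."""
    c = _challenge(public_key, commitment)
    return pow(G, response, P) == (commitment * pow(public_key, c, P)) % P


if __name__ == "__main__":
    pk, t, s = prove(secret=7)
    print("proof accepted:", verify(pk, t, s))
```

The verifier only ever handles the public key, the commitment and the response. Real systems use far larger groups and much richer statements (for example, "this person has passed KYC"), but the shape is the same.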

So far, this non-state controlled digital ID has failed to scale because everyone is incentivised to not allow it to scale. Google, Facebook, Twitter, Reddit, YouTube, etc., all need you to reveal your ID and data so it can be monetised by them. If you were to own your own data, then you get to choose who gets your data and can therefore demand a share of the revenues.

I think this is coming but the system is stacked against it. “They” don’t want it.

But everyone can – and needs to – use this technology to fight AI fakes. If all content is authenticated on a blockchain, and all people too, then we know who is real and who isn’t. Fakes will have no verifiable record on the blockchain, but all real, provable people, companies and content will.

Here I am talking about every single article from every media outlet, every person on social media (we can also have multiple, anonymous online identities as long as the ZK Proof exists underlying them), every single video and podcast etc. Everything will need to be authenticatable and verifiable, and we will need social or distributed consensus to make it immutable and provably factual.
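As a rough sketch of what authenticating content could look like in practice, the snippet below registers a fingerprint (a SHA-256 hash) of each piece of content in an append-only, hash-linked ledger and later checks whether a given article matches a registered original. Everything here is an assumption for illustration: the `ContentLedger` class and its method names are hypothetical, it runs in a single process, and it has no signatures, ZK Proofs or distributed consensus, all of which a real on-chain system would need.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def sha256_hex(data):
    return hashlib.sha256(data).hexdigest()


def _record_hash(body):
    # Hash a canonical JSON encoding so the result is reproducible.
    return sha256_hex(json.dumps(body, sort_keys=True).encode())


class ContentLedger:
    """Hypothetical append-only ledger; each record commits to the one before."""

    def __init__(self):
        self.records = []

    def register(self, publisher, content):
        prev_hash = self.records[-1]["record_hash"] if self.records else GENESIS
        body = {
            "publisher": publisher,
            "content_hash": sha256_hex(content),
            "prev_hash": prev_hash,
        }
        record = dict(body, record_hash=_record_hash(body))
        self.records.append(record)
        return record

    def lookup(self, content):
        """Return the registered publisher for this exact content, else None."""
        content_hash = sha256_hex(content)
        for record in self.records:
            if record["content_hash"] == content_hash:
                return record["publisher"]
        return None

    def is_intact(self):
        """Recompute every link; any edit to an earlier record breaks the chain."""
        prev_hash = GENESIS
        for record in self.records:
            body = {k: record[k] for k in ("publisher", "content_hash", "prev_hash")}
            if record["prev_hash"] != prev_hash or record["record_hash"] != _record_hash(body):
                return False
            prev_hash = record["record_hash"]
        return True


if __name__ == "__main__":
    ledger = ContentLedger()
    ledger.register("example-news", b"Original article text")
    print(ledger.lookup(b"Original article text"))
    print(ledger.lookup(b"Doctored article text"))
```

Changing a single character of an article changes its hash, so a doctored copy simply has no matching record. That is the sense in which fakes would be absent from the chain while genuine content is provable.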

If everything can be cross-referenced on-chain in this manner then we stand a chance of avoiding the very bad outcomes that are coming at us in the next two years.

I have raised this issue many times on Twitter and in videos over the last six months and each time someone points out some small crypto project that is trying to solve this for ID privacy. There is zero-point-zero chance any of these can or will scale fast enough to solve the urgent needs I have highlighted. Add to that the fact that incumbent players don’t want it, and the task becomes near impossible.

My solution to this, which I am trying to build a general understanding of, is that the major incumbent players can and should use blockchain to verify all their own users and content. Yes, they will give up some of their data, but they will replace it with a Web3 social graph of users and content. This is the social graph of digital wallets, anonymous or otherwise, that all users of the internet will have very soon. CBDCs will cement this without question, but it will be done at a private sector level too. Those wallets will give new types of information around online activity and consumer preferences that can be monetised in different ways.